Artificial Intelligence is no longer a buzzword in cybersecurity—it’s an active player in both defending and breaching digital frontiers. But has it truly revolutionized the field, or are we still exploring its real potential?


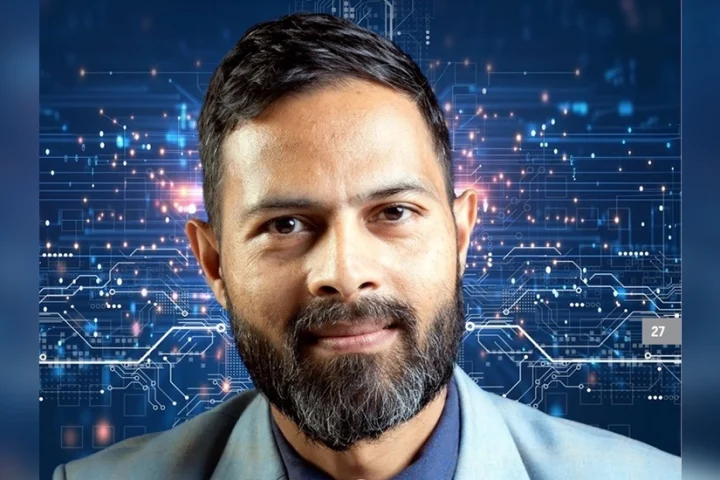

“Given the nature of cyber resilience—threat detection, incident response, and risk management—cybersecurity was one of the first domains to adopt AI at scale,” says David Boast, General Manager – UAE & KSA, Endava. While AI features have been advertised for years, only recently have real-world deployments accelerated.

In regions like the UAE and Saudi Arabia—where digital transformation is a national priority—cybersecurity has shifted from a compliance-driven task to a core business enabler. AI plays a central role in that evolution.

“From threat detection to response orchestration, it enables security teams to act with greater speed, context, and confidence,” Boast adds. Still, it only becomes a game-changer when applied intentionally—with quality data, defined thresholds, and strong governance.

AI for both sides of the battle

Norbert Biro, Senior Security Researcher at Acronis, agrees: AI is already a game-changer, particularly in threat prediction, automated detection, and faster response times. But there’s a catch—bad actors are leveraging it too.

Tools like WormGPT and FraudGPT allow attackers to automate phishing campaigns, malware creation, and social engineering. In short: as defenders get smarter, so do threats.

Inside today’s AI toolset

What AI techniques are being used in real-world cyber defense?

Boast outlines a blend of supervised and unsupervised machine learning, helping detect both known and unknown risks. “Behavioral analytics is especially advanced in sectors like healthcare and finance,” he explains. These systems learn what normal user behavior looks like—and flag deviations in real time.
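To make the idea of learning "normal" behavior concrete, here is a minimal sketch of a behavioral baseline. It is an illustration only, not the approach any vendor quoted here uses: it assumes a single hypothetical signal (a user's daily download volume in MB) and a simple z-score threshold, whereas production systems model many signals at once. The function names and sample figures are invented for the example.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Summarise past behaviour as (mean, standard deviation)."""
    return mean(history), stdev(history)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value that sits more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        # No variation in the history: any different value is a deviation.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical daily download volumes (MB) for one user over a week.
history = [120, 135, 110, 128, 140, 118, 125]
baseline = build_baseline(history)

print(is_anomalous(130, baseline))  # an ordinary day
print(is_anomalous(900, baseline))  # a sudden bulk download
```

The threshold of three standard deviations is an assumption. Lowering it catches more deviations at the cost of more false alarms, which is the kind of "defined threshold" decision the article describes.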

Natural Language Processing (NLP) is also gaining traction in parsing phishing content, analysing communications for insider threats, and scanning threat intel sources, helping organisations respond faster and more accurately.

From reactive to predictive

Norbert from Acronis says traditional defenses rely on known patterns and static rules. AI, in contrast, excels at learning the behavior of users, systems, and networks, and at flagging deviations in real time. This allows it to detect sophisticated or emerging threats that don’t match existing signatures, such as insider threats or zero-day exploits. AI also automates analysis across massive datasets, which shortens detection and dwell time and frees human analysts to focus on strategic issues.

Staying one step ahead

“Defending against AI-driven attacks means investing in smarter, AI-powered tools,” says Faisal Khan, Acting Associate Director – Information Security & Compliance, Dubai World Trade Center.

But tech alone isn’t enough.

He says organizations must continuously train these models with updated threat intelligence. Human vigilance remains key: regular training and awareness programs help employees recognize AI-generated social engineering attempts. Collaboration between cybersecurity teams, data scientists, and threat intelligence units improves the ability to anticipate and mitigate emerging threats, while regulatory compliance, ethical AI usage, and continuous red teaming exercises should complement these efforts. Staying ahead, he adds, means not only matching the sophistication of attackers but outpacing them with innovation, adaptability, and resilience.

Roland Daccache, Senior Manager – Sales Engineering at CrowdStrike MEA, says that to stay ahead and stop breaches, organisations need a true security platform, natively built with AI at its core and paired with human expertise and oversight, to protect critical assets from the endpoint and beyond.

“By investing in a single AI-native platform, enterprises can break down operational silos, reduce complexity and lessen cost to eliminate the security risks introduced by legacy point solutions,” he says.

Are autonomous cyber defenses close?

Padam Sundar Kafle, Head of Operational Technology and Innovation, Aster Hospitals UAE & Oman, says the industry is rapidly progressing toward semi-autonomous cyber defense ecosystems, but full autonomy, where AI independently detects, interprets, and mitigates threats without human intervention, remains a few steps away. In healthcare, where patient safety is paramount, the stakes are higher. Aster Hospitals, he says, already uses AI for predictive threat modeling, anomaly detection, and behavioral analytics to anticipate cyber risks before they materialize.

“However, true autonomy will require not only more advanced machine reasoning but also trusted explainability, regulatory alignment, and ethical guardrails. The convergence of AI with Operational Technology (OT) security, particularly in hospitals, makes autonomous defense both a necessity and a challenge,” he says.

While AI can handle scale and speed far beyond human capability, the “human-in-the-loop” remains critical—especially in high-sensitivity sectors like healthcare.

Filtering the noise

Norbert says AI systems improve accuracy by layering behavioral context onto alerts. Instead of flagging every anomaly, they assess multiple dimensions, such as time of access, volume of data moved, or role-based activity patterns, to determine the likelihood of malicious intent. As models continue to learn from feedback and outcomes, their precision improves. That said, AI isn’t infallible. Human analysts are still needed to interpret edge cases, reduce bias, and weigh business impact. The real value comes from the partnership: AI accelerates detection, but humans validate and act.
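The layering of context onto alerts can be sketched as a weighted risk score. This is an illustrative assumption, not a description of any product named in this article: the role profiles, weights, and escalation threshold below are all hypothetical, and a real system would learn them from data and analyst feedback rather than hard-code them.

```python
from dataclasses import dataclass


@dataclass
class AccessEvent:
    user_role: str
    hour: int        # local hour of access, 0-23
    mb_moved: float  # volume of data moved, in MB
    resource: str


# Hypothetical expectations per role (working hours, typical volume, usual resources).
ROLE_PROFILES = {
    "nurse": {"hours": range(6, 20), "typical_mb": 50, "resources": {"patient_records"}},
    "finance": {"hours": range(8, 18), "typical_mb": 200, "resources": {"ledger", "payroll"}},
}

# Hypothetical weights for each dimension; they sum to 1.0.
WEIGHTS = {"off_hours": 0.3, "volume": 0.4, "off_role": 0.3}


def risk_score(event):
    """Combine three context signals into one score between 0 and 1."""
    profile = ROLE_PROFILES[event.user_role]
    off_hours = 0.0 if event.hour in profile["hours"] else 1.0
    # Volume signal grows once the transfer exceeds the typical size
    # and saturates at ten times the typical size.
    ratio = event.mb_moved / profile["typical_mb"]
    volume = min(max(ratio - 1.0, 0.0) / 9.0, 1.0)
    off_role = 0.0 if event.resource in profile["resources"] else 1.0
    return (WEIGHTS["off_hours"] * off_hours
            + WEIGHTS["volume"] * volume
            + WEIGHTS["off_role"] * off_role)


def triage(event, escalate_at=0.5):
    """Escalate to a human analyst only when the combined score is high enough."""
    return "escalate to analyst" if risk_score(event) >= escalate_at else "log only"


routine = AccessEvent("nurse", 10, 40, "patient_records")
late_shift = AccessEvent("nurse", 2, 40, "patient_records")
suspicious = AccessEvent("nurse", 2, 600, "payroll")

for event in (routine, late_shift, suspicious):
    print(event.resource, event.hour, triage(event))
```

In this sketch a single unusual dimension, such as a late-night login, is logged but not escalated. Only the combination of odd hours, large volume, and an out-of-role resource reaches the analyst, which mirrors the point that humans validate and act on what the model surfaces.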

Catching zero-day threats

Can AI catch zero-day threats? In certain scenarios, and especially when behavioural analytics and dark web intelligence are in play, it can, says Boast from Endava.

In the UAE and Saudi Arabia, where digital transformation is accelerating, attackers are getting more sophisticated. AI models trained on behavioural baselines can detect subtle anomalies that slip past rules-based systems, while NLP-powered tools scan the dark web for early signs of emerging threats. A notable example is Resecurity’s AI-led dark web monitoring in Saudi Arabia, which helps sectors like defence and aerospace spot targeted threats early. Combined with internal telemetry, this gives security teams a valuable head start.

But AI isn’t a silver bullet. Its impact depends on smart integration, strong governance, and human oversight. The best defence today? Hybrid models—AI for speed and scale, humans for judgement. As GCC regulators formalise responsible AI use, expect even broader adoption across critical sectors.

Know the limits

Padam says AI excels at rapid detection, pattern recognition, and even decision-making under predefined rules. But its limitations become evident in ambiguous scenarios requiring contextual judgment, ethical considerations, or cross-domain reasoning. In incident response, over-reliance on AI could lead to false positives, overlooked zero-day threats, or inappropriate automated actions, especially in complex OT environments like hospitals, where system availability can directly impact human lives.

Looking ahead: The AI horizon

“In the next 2–3 years, AI will become indispensable in cybersecurity,” predicts Faisal.

As cyber threats grow more sophisticated, AI-driven tools will enhance real-time monitoring, anomaly detection, and response capabilities, helping security teams stay ahead of emerging risks. AI will also improve threat intelligence by correlating vast amounts of data across sources to identify patterns and potential breaches earlier, he says.

Roland from CrowdStrike wraps it up: “In the next 2–3 years, AI will move from assistive to truly agentic – taking on more complex, multi-step tasks with minimal input. But success won’t come from raw automation, it’ll come from pairing AI with the right data, the right workflows and the right human oversight. The future of cybersecurity isn’t AI versus humans – it’s AI empowering humans to stop breaches faster, with greater precision and less effort.”