Janus Henderson Investors portfolio manager Richard Clode shares key insights from NVIDIA’s latest quarterly earnings call.

AI tokens (the units of data processed by AI models during training and inference) are the new oil, given the recent AI chip deals announced by President Trump and Jensen Huang in Saudi Arabia and the UAE.

Microsoft disclosed that it generated 100 trillion tokens in Q1 2025, up 5x year-on-year, with 50 trillion tokens processed in March. Google, meanwhile, revealed at its I/O event last week that it is now processing over 480 trillion tokens per month, up 50x year-on-year.
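To put those growth multiples in context, the arithmetic below backs out the year-ago volumes they imply. It is a rough sketch using only the figures quoted above; the function name `implied_prior_volume` is illustrative, and the Google figure is a floor because the company said "over" 480 trillion.

```python
# Figures quoted in the article, in trillions of tokens.
MICROSOFT_Q1_2025 = 100.0   # tokens generated in the quarter, up 5x year-on-year
MICROSOFT_MARCH = 50.0      # tokens processed in March
GOOGLE_MONTHLY = 480.0      # "over 480 trillion" per month, up 50x year-on-year


def implied_prior_volume(current: float, growth_multiple: float) -> float:
    """Back out the year-ago volume implied by a year-on-year growth multiple."""
    return current / growth_multiple


microsoft_prior = implied_prior_volume(MICROSOFT_Q1_2025, 5)
google_prior = implied_prior_volume(GOOGLE_MONTHLY, 50)

# Ratio of the March figure to the quarterly figure; a flat quarter would give about 1/3.
march_to_quarter = MICROSOFT_MARCH / MICROSOFT_Q1_2025

print(f"Microsoft, implied year-ago quarter: {microsoft_prior:.1f} trillion")
print(f"Google, implied year-ago month: {google_prior:.1f} trillion")
print(f"Microsoft March figure relative to Q1: {march_to_quarter:.0%}")
```

The March figure equals half of the quarterly total, against roughly a third if usage had been flat, which is consistent with usage accelerating into the end of the quarter.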

NVIDIA asserted that token generation has surged tenfold over the past year. This is a very important inflection for a number of reasons. The market has been debating the use cases of AI, and token generation is a key metric of both usage and intelligence. The surge also highlights the misconceptions of the ‘DeepSeek moment’ earlier this year, when the market focused too heavily on the lower AI capex required to train the model and ignored the significantly greater compute intensity of the reasoning model DeepSeek released. According to NVIDIA, reasoning models can be 100x to 1,000x more compute intensive than a single-shot chatbot query, because the model is ‘thinking’: exploring multiple pathways to solve a more complex query and checking its answer. That generates exponentially more tokens while enabling new use cases from that greater intelligence, be it agentic AI for consumers or enterprises, or autonomous vehicles and humanoids powered by physical AI. As AI innovation proliferates use cases that provide a credible return on investment, AI infrastructure spending becomes more sustainable. That return is supporting the expanding usage of AI via inferencing, rather than just throwing more compute at training ever-larger frontier AI models.

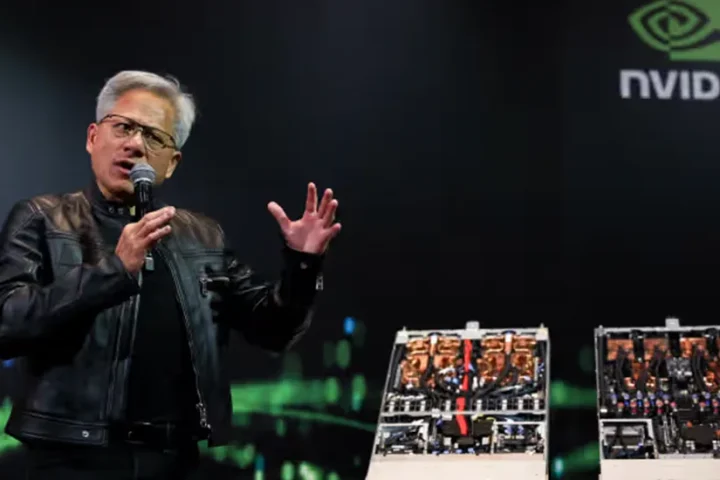

Blackwell built for a reasoning world

In the same way that the Hopper chip was specifically designed to train large language transformer models, NVIDIA specifically designed its Blackwell chip to meet the performance demands of next-generation reasoning models. Interacting with a reasoning model while ensuring a reasonable quality of service and avoiding prohibitive cost or power consumption required NVIDIA to design at the rack level for the first time: the NVL72 weighs in at two tonnes with 1.2 million components. The result is 40x the inferencing performance of Hopper, bringing next-generation AI infrastructure into the AI reasoning world and answering many questions from last year around the relative merits of ASICs vs GPUs.

The ramp-up of Blackwell has been far from serene, with a re-spin of the underlying chip at TSMC late last year, plus recent board and yield issues with the GB200 racks. However, these issues appear to be finally in the rear-view mirror, allowing the market to look forward to a potentially significant ramp-up in rack supply through the rest of the year. NVIDIA talked about hyperscalers now deploying on average nearly 1,000 NVL72 racks per week, or nearly 72,000 Blackwell GPUs per week. Blackwell Ultra GB300 racks are also now sampling. Because they share the same physical footprint and rack design as the GB200, this could result in a much smoother supply ramp from H2 2025 to meet burgeoning inferencing demand with around 50% greater performance.
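The rack-to-GPU conversion above is simple multiplication, sketched below for readers who want to vary the inputs. The 72 GPUs per rack is implied by the article's own figures; the 13-week extension is purely hypothetical and assumes a flat deployment rate, so it is not a forecast. The function name `gpus_deployed` is illustrative.

```python
# Figures quoted in the article (NVIDIA's earnings-call commentary).
GPUS_PER_NVL72_RACK = 72   # implied by 1,000 racks equating to 72,000 GPUs
RACKS_PER_WEEK = 1_000     # "nearly 1,000", so treat this as an upper bound


def gpus_deployed(racks_per_week: int, weeks: int = 1,
                  gpus_per_rack: int = GPUS_PER_NVL72_RACK) -> int:
    """Return GPUs deployed over `weeks` at a constant weekly rack rate."""
    return racks_per_week * gpus_per_rack * weeks


weekly_gpus = gpus_deployed(RACKS_PER_WEEK)

# Hypothetical: 13 weeks at a flat rate; not a figure from the article.
quarterly_gpus = gpus_deployed(RACKS_PER_WEEK, weeks=13)

print(f"GPUs per week: {weekly_gpus:,}")
print(f"GPUs over 13 flat weeks (illustrative): {quarterly_gpus:,}")
```

Since the weekly rack count is described as "nearly" 1,000, both outputs should be read as upper bounds on the quoted run rate.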

Geopolitics and deglobalisation implications

AI is at the front line of geopolitics and superpower supremacy. Following the H20 ban, we appear close to an endgame for NVIDIA’s datacentre business in China, but Jensen Huang continued to make an impassioned plea that these restrictions are misguided: they will not stop China from having AI or AI chips, because it already has both. A superior strategy would be to ensure that one of the world’s largest AI markets, with half the world’s AI developers, is built on US platforms and infrastructure.

NVIDIA continues to explore options to supply China with a chip of further degraded AI performance, but it remains to be seen whether that will be allowed or whether those chips can be competitive. On a more positive note, the rescinding of the Biden administration’s AI Diffusion Rule, which was meant to go into effect on 15 May, enabled the recent deals in Saudi Arabia and the UAE. NVIDIA also clarified that while Singapore contributes a significant portion of its sales, the vast majority of that AI compute ends up with US-based customers, refuting recent reports of chips being diverted to circumvent export restrictions.

Finally, NVIDIA confirmed that within a year it will be producing AI chips at TSMC fabs in Arizona and assembling AI supercomputers in factories in Texas. NVIDIA’s support of President Trump’s aim of building manufacturing capacity in the US continues the deglobalisation of supply chains we have witnessed post-pandemic in the face of geopolitics, trade wars and demographics.

Missing the wood for the trees

While the markets typically look to NVIDIA’s earnings releases as a bellwether for the tech sector and for geopolitical signals, given its dominant role in the AI wave, we believe the short-term focus risks missing the wood for the trees. AI continues to innovate through reasoning models, agentic AI and physical AI, creating compelling use cases and monetisation models. This is driving a pivot in AI infrastructure demand from training clusters to inferencing, supporting an inflection in token generation as reasoning models and their usage proliferate, and creating a longer, larger and more sustainable runway for growth in the tech sector and the global economy.