Dynatrace announced new AI-powered data observability capabilities for its analytics and automation platform. With Dynatrace Data Observability, teams can confidently rely on all observability, security, and business events data in Dynatrace to fuel the platform’s Davis AI engine, helping to eliminate false positives and deliver trustworthy business analytics and reliable automations. Dynatrace Data Observability helps business analytics, data science, DevOps, SRE, security, and other teams ensure that all data in the Dynatrace platform is of high quality.

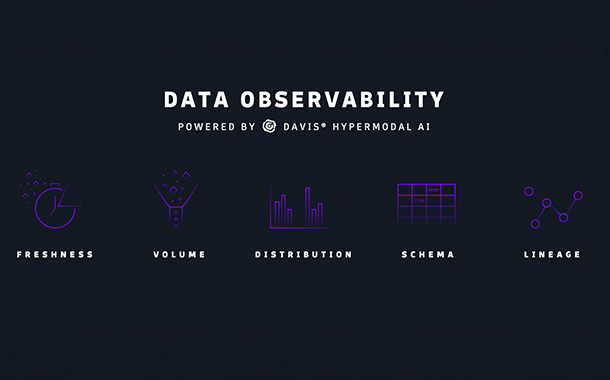

This complements the data cleansing and enrichment capabilities that Dynatrace OneAgent already provides, extending quality assurance to data collected from other, external sources. These include open standards such as OpenTelemetry and custom instrumentation such as logs and Dynatrace APIs. Teams can track the freshness, volume, distribution, schema, lineage, and availability of this externally sourced data, reducing or eliminating the need for additional data cleansing tools.

“Dynatrace, with its OneAgent technology, provides us with a high level of confidence that the data powering our analytics and automation is healthy. The platform is also very flexible, which enables us to tap into custom data sources and open standards, like OpenTelemetry,” said Kulvir Gahunia, Director, Site Reliability Office at TELUS. “New Dynatrace data observability capabilities will help ensure the data from these custom sources is also high-quality fuel for our analytics and automation. This will save us from having to cleanse the data manually and reduce the need for additional data cleansing tools.”

High-quality data is critical for organizations that rely on it to inform business and product strategies, optimize and automate processes, and drive continuous improvement. However, the scale and complexity of data from modern cloud ecosystems, combined with the growing use of open source solutions, open APIs, and other custom instrumentation, make high data quality hard to achieve.

By adopting data observability techniques, organizations can improve data availability, reliability, and quality throughout the data lifecycle, from ingestion to analytics and automation. According to Gartner, “by 2026, 30% of enterprises implementing distributed data architectures will have adopted data observability techniques to improve visibility over the state of their data landscape, up from less than 5% in 2023.”

Dynatrace Data Observability works with other core Dynatrace platform technologies, including Davis hypermodal AI, which combines predictive, causal, and generative AI capabilities, to provide data-driven teams with the following benefits:

Freshness: Helps ensure that the data used for analytics and automation is up to date and timely, and alerts on issues such as out-of-stock inventory, changes in product pricing, and timestamp anomalies.

Volume: Monitors for unexpected increases, decreases, or gaps in data (for example, in the reported number of customers using a particular service), which can indicate undetected issues.

Distribution: Monitors for patterns, deviations, or outliers from the expected way data values are spread in a dataset, which can signal issues in data collection or processing.

Schema: Tracks data structure and alerts on unexpected changes, such as new or deleted fields, to prevent unexpected outcomes like broken reports and dashboards.

Lineage: Traces where data originates and which downstream services it will affect, delivering precise root-cause detail that helps teams proactively identify and resolve data issues before they impact users or customers.

Availability: Uses the Dynatrace platform’s infrastructure observability capabilities to monitor how digital services use servers, networking, and storage, and alerts on abnormalities such as downtime and latency, helping to maintain a steady flow of data from these sources for healthy analytics and automation.
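The announcement does not describe how Dynatrace implements these checks. As a generic illustration of what three of them (freshness, volume, and schema) mean in practice, here is a minimal sketch in plain Python. The function names, the thresholds, and the z-score approach to volume anomalies are all assumptions made for illustration. This is not Dynatrace’s API or detection algorithm.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean, stdev

# Hypothetical record type for illustration only; not a Dynatrace data model.
Record = dict[str, object]


def check_freshness(latest: datetime, now: datetime, max_age: timedelta) -> bool:
    """Freshness: True if the newest record is no older than max_age."""
    return now - latest <= max_age


def check_volume(history: list[int], current: int, threshold: float = 3.0) -> bool:
    """Volume: True if the current count lies within `threshold` sample
    standard deviations of the historical mean (a simple z-score test)."""
    if len(history) < 2:
        return True  # not enough history to judge; assume healthy
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return current == mu  # perfectly flat history: any change is anomalous
    return abs(current - mu) / sigma <= threshold


def check_schema(expected: set[str], record: Record) -> tuple[set[str], set[str]]:
    """Schema: return (missing_fields, unexpected_fields) versus the expected schema."""
    fields = set(record)
    return expected - fields, fields - expected


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    # Data last updated 5 minutes ago, with a 15-minute freshness budget.
    print(check_freshness(now - timedelta(minutes=5), now, timedelta(minutes=15)))
    # A sudden drop from roughly 100 events per interval to 40.
    print(check_volume([100, 102, 98, 101, 99], 40))
    # A record that lost its "price" field and gained an unexpected "currency" field.
    print(check_schema({"id", "price", "ts"}, {"id": 1, "ts": now, "currency": "EUR"}))
```

A production data observability system would learn baselines such as `max_age` and the volume threshold automatically and account for seasonality. The fixed parameters here are only meant to make the idea behind each check concrete.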

“Data quality and reliability are vital for organizations to perform, innovate, and comply with industry regulations,” said Bernd Greifeneder, CTO at Dynatrace.

“A valuable analytics solution must detect issues in the data that fuels analytics and automation as early as possible. Dynatrace OneAgent has always helped ensure that the data it collects is of the highest quality. By adding data observability capabilities to our unified and open platform, we’re enabling our customers to harness the power of data from more sources for more analytics and automation possibilities while maintaining the health of their data, without any extra tools.”