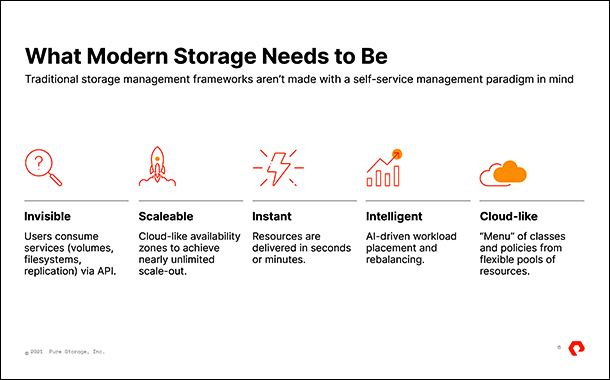

Successful enterprises tend to grow their businesses over time. As new workloads are added and their data grows, their storage performance and capacity requirements grow.

The life cycle of a typical legacy enterprise storage platform varies but generally falls in the range of three to five years. Ultimately, the fixed storage performance of the legacy system no longer meets requirements, and the business is forced to perform a forklift upgrade to gain access to the newer controller and storage media technologies needed to meet its requirements most cost-effectively.

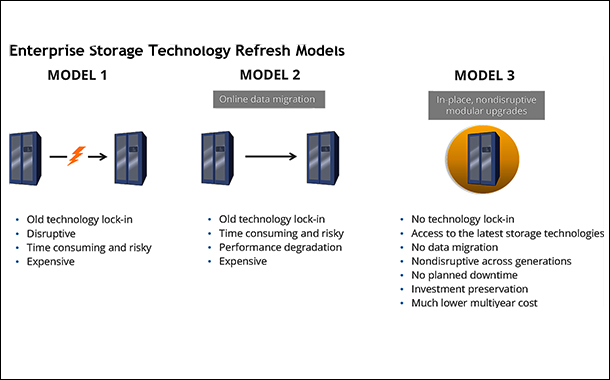

Purchasers of enterprise storage have historically dealt with an upgrade cycle that was expensive, disruptive, inherently risky, time consuming, and strongly driven by vendors’ technology refresh cycles and pricing.

The legacy enterprise storage upgrade cycle is familiar to most storage administrators. An enterprise purchases a new storage array, which includes a given storage capacity that may be expanded over the life of the product, but the maximum storage performance achievable by the system is fixed based on the capabilities of the controllers and the internal array bandwidth at the time the product is shipped. Regardless of how much capacity may be added over time, the maximum performance potential in terms of storage latency, throughput, and bandwidth does not increase.

Even if a business is not outgrowing the performance of its storage, media density, power consumption, and maintenance costs on older products may become sufficiently onerous to also drive a company to want to upgrade to newer technology. This cycle repeats over time.

The model locks customers into older technology. When a legacy enterprise storage array is developed, it can be designed to include the latest in controller, backplane, and storage media technology. Firmware and software upgrades over the life cycle of the product can provide incremental performance improvements, but customers are locked into the limitations of that technology as originally designed for the life of the product.

For example, newer, higher-performance, and more efficient NVMe technologies cannot be used to full effect in legacy systems designed around SCSI. Although capacity can be added, often all drives must be of the type available when the system was first purchased. Customers do not necessarily have access to major advancements that provide order-of-magnitude improvements in performance, storage density, and cost.

Forklift upgrades are disruptive. Moving to the next generation in controller, backplane, and storage media technology requires a completely redesigned array with typically much higher internal bandwidth to take full advantage of performance and density advancements in storage-related technologies. This means that a completely new array must be brought in to replace the existing array, and that often means downtime as well as data migration.

Application and data migration is time consuming and risky. During the upgrade, all the applications and data in the old array must be migrated to the new array. Today, even the smallest enterprises are dealing with at least tens of terabytes of data, and most are dealing with hundreds of terabytes and looking at managing petabytes of data soon if they are not already doing so. Even if data is migrated over high-performance networks such as Fibre Channel, migrating that much data can easily take days if not weeks or months for many enterprises.
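To make the "days if not weeks" claim concrete, the following is a rough back-of-the-envelope sketch, not a sizing tool. The function name `migration_days`, the nominal 16 Gb/s link speed, and the 50% efficiency factor are all illustrative assumptions rather than figures from the source; the efficiency factor stands in for protocol overhead and contention with production traffic.

```python
SECONDS_PER_DAY = 86_400


def migration_days(data_tb: float, link_gbps: float, efficiency: float) -> float:
    """Estimate the days needed to copy `data_tb` terabytes over one link.

    data_tb    -- data to move, in decimal terabytes (1 TB = 1e12 bytes)
    link_gbps  -- nominal link speed in gigabits per second (assumed value)
    efficiency -- fraction of the nominal speed actually sustained (assumed value)
    """
    if data_tb < 0 or link_gbps <= 0:
        raise ValueError("data_tb must be >= 0 and link_gbps must be > 0")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    total_bits = data_tb * 1e12 * 8
    effective_bits_per_second = link_gbps * 1e9 * efficiency
    return total_bits / effective_bits_per_second / SECONDS_PER_DAY


if __name__ == "__main__":
    # Hypothetical data set sizes, one assumed 16 Gb/s link at 50% efficiency.
    for size_tb in (50, 500, 2000):
        days = migration_days(size_tb, link_gbps=16, efficiency=0.5)
        print(f"{size_tb:>5} TB: about {days:.1f} days of continuous transfer")
```

Under these assumptions, 500 TB works out to a little under six days of uninterrupted copying. Real migrations typically also need time for throttling around business hours, validation, and cutover, which is how the elapsed time stretches into weeks or months.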

Customers may also have extensive snapshot trees and replica libraries that will be lost if they cannot be migrated to the new system. Often, newer systems use a new higher-performance or more efficient on-disk format, so customers can incur conversion risk during the migration as well.

Upgrades are extremely costly. A customer must buy the new hardware and any required software as well as rebuy capacity. Generally, none of the hardware and software from the older array can be transferred to the new array, so all of the capital expenditure (capex) must be repeated even if the customer wants just the same basic features (x amount of capacity, snapshot, and replication software).

And then, to help this inherently risky process go more smoothly, many enterprises hire outside professional services firms to plan and execute the technology refresh, a decision that can easily add tens of thousands of dollars in services cost to an already considerable capital expense.

Delaying upgrades may be even more costly. As legacy systems near their performance thresholds, it becomes relatively more expensive to increase performance further.

More older-technology resources are required to meet increased requirements than would be needed with denser, higher-performance newer technology. Those added older resources offer lower performance and capacity density, making it relatively more expensive to scale system capabilities with them: more devices are needed, and they consume more energy and floor space. Maintenance costs also often increase on older systems, providing an additional vendor-driven incentive for customers to look at upgrading to newer technology.

Excerpted from the IDC White Paper "Evergreen//Forever Subscription Continues to Drive Industry-Leading Customer Experience as a Differentiator for Pure Storage" by Eric Burgener.